- Ophthalmology Times, April 15 2019

- Volume 44

- Issue 7

Artificial intelligence gains more acceptance in ophthalmology

Artificial intelligence has the potential to have a significant impact on ophthalmology in the coming years.

Artificial intelligence can provide a knowledge base that serves as a foundation for the interpretation of data. The humanistic elements of medicine remain vital.

Artificial intelligence (AI) is a technology in which machines and equipment can “learn” from experience and adjust accordingly.

The technology has the potential to have a significant impact on ophthalmology in the coming years, according to Dimitri Azar, MD, MBA.

Three categories of algorithms exist for AI and machine learning:

- Unsupervised learning, which groups data that has not been labeled and includes methods such as clustering,

- Supervised learning, which infers a function from labeled training data, mapping an input to an output based on example input-output pairs; it relies heavily on labeled data and includes methods such as linear regression analysis, support vector machine analysis, decision trees and random forests, and convolutional neural networks and deep learning,

- Semi-supervised learning, which uses mostly unlabeled data with a small amount of labeled data.
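The difference between the first two categories can be sketched in a few lines of Python. This is a minimal illustration, not anything presented by Dr. Azar: the function names `kmeans_1d` and `fit_line` and all of the numbers are made up for the example. The clustering function receives only unlabeled values and finds groups on its own, while the regression function receives input-output pairs and infers the mapping between them.

```python
from statistics import mean

def kmeans_1d(values, k=2, iterations=10):
    """Unsupervised: group unlabeled numbers into k clusters (k >= 2)."""
    # Start with centers spread evenly across the sorted data.
    ordered = sorted(values)
    centers = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Move each center to the mean of its members (keep it if empty).
        centers = [mean(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers

def fit_line(xs, ys):
    """Supervised: infer y = slope * x + intercept from labeled pairs."""
    mx, my = mean(xs), mean(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return slope, my - slope * mx

# Unlabeled measurements (made-up numbers): the algorithm finds two groups.
centers = kmeans_1d([1.0, 1.2, 0.8, 5.0, 5.2, 4.8])

# Labeled input-output pairs (made-up numbers): the algorithm learns the mapping.
slope, intercept = fit_line([1, 2, 3, 4], [2, 4, 6, 8])
```

A semi-supervised method would combine the two ideas, using a few labeled pairs to name or refine groups discovered in a larger pool of unlabeled data.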

In ophthalmology, the neural network approach, deep learning, has surpassed other methods in recent years, said Dr. Azar, senior director of ophthalmic innovations, and clinical lead of ophthalmology, A

Deep learning requires a level of computing power that was not available to most researchers in the past, so other approaches were more likely to be used. With today’s increased access to big data and analytics, traditional methods have plateaued, and deep learning has surpassed them because it can take advantage of that greater analytical capacity, Dr. Azar said.

“One thing about these learning networks that I was impressed with is that as the training commences, neural networks start off without any fine tuning, and return random results,” he said. “The neural network progressively learns the combinations and permutations of important features.”

A difference between neural networks and human beings is that humans have trouble letting go of inaccurate information that was thought to be useful in the past. Convolutional neural networks, as they progressively learn combinations and permutations of the important features, learn to ignore the unimportant features and so produce better algorithms, he noted.

Neural Network Process

The process assigns a weight to each feature: the features the network finds most important are given the most weight.

As the network learns more, these weightings shift, so a feature that early on was thought to be important will be given less weight, as other features are given more weight. This allows the networks to make more accurate predictions.
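How weightings shift during training can be shown with a toy model. The sketch below is an assumption-laden illustration rather than a real diagnostic network: it is a single linear unit trained by gradient descent on made-up data, where the target depends only on the first feature. The model starts out putting all its weight on the wrong feature and gradually moves that weight to the one that matters.

```python
# Each row: (informative feature, uninformative feature) -> target.
# Targets equal 3 * the informative feature; the second feature is irrelevant.
data = [
    ((1.0, 0.5), 3.0),
    ((2.0, -1.0), 6.0),
    ((3.0, 0.2), 9.0),
    ((4.0, -0.3), 12.0),
]

weights = [0.0, 1.0]   # starts out trusting the wrong feature
learning_rate = 0.01

for epoch in range(2000):
    for features, target in data:
        prediction = sum(w * x for w, x in zip(weights, features))
        error = prediction - target
        # Gradient-descent step on squared error: nudge each weight
        # against its contribution to the error.
        weights = [w - learning_rate * error * x
                   for w, x in zip(weights, features)]

# After training, the first weight is close to 3 and the second close to 0.
print(weights)
```

Deep networks apply the same kind of update to millions of weights across many layers, which is part of why the final weighting is hard to inspect by hand.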

The algorithm-generation process begins with an input layer. Before there is any output, there are several hidden layers. Because the applications operate like black boxes, the results are not always given with an explanation. This makes detection of inappropriate outcomes difficult.

As algorithms become more powerful, the methods for troubleshooting them may lag behind. This is a potential limitation of the approach and may have implications for obtaining approval.
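The layered structure can be sketched as a forward pass through a very small network. Everything here is hypothetical: the layer sizes, the fixed weights, and the input values are placeholders chosen for illustration, where a trained network would have learned its weights from data. The point is that the caller sees only the final score, not the intermediate activations that produced it.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer followed by a sigmoid activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-total)))
    return outputs

# Hypothetical fixed weights; a trained network would learn these.
hidden1 = ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1])  # 3 inputs -> 2 units
hidden2 = ([[1.0, -1.0], [0.5, 0.5]], [0.0, -0.2])            # 2 units -> 2 units
output = ([[1.2, -0.7]], [0.05])                              # 2 units -> 1 output

def forward(inputs):
    """Pass the input through both hidden layers, then the output layer."""
    activations = inputs
    for weights, biases in (hidden1, hidden2, output):
        activations = dense(activations, weights, biases)
    return activations[0]

# The result is a single number between 0 and 1, with no explanation attached.
score = forward([0.2, 0.7, 0.1])
```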

AI in the Literature

Dr. Azar did a literature search on the use of AI in ophthalmology, covering AMD, diabetic retinopathy, retinopathy of prematurity, dry eye, keratoconus and corneal topography, glaucoma, and visual fields. The search showed that from 2016 through the first half of 2018, a period of 30 months, there were about twice as many publications as in the 60 months from 2006 through 2010.

AMD and diabetic retinopathy were the most frequent topics found in the search. There are AI applications in corneal topography (some of which stem from his early work with Dr. Paul Lu), and dry eye as well. He proposes future studies using AI that could simplify the diagnosis and classification of different types of dry eye, and their severity, potentially replacing the current methods that are based on the DEWS II report.

In glaucoma there are studies utilizing deep learning. Some look at the nerve fiber layer, and others focus on the optic nerve. Future studies will include using OCTA to examine blood flow in the retina, adjacent to the nerve.

Connecting the data

When it comes to AI, a major problem in glaucoma is that the data comes from multiple sources, which frequently do not connect with each other. There may be billing data, patient information, imaging data, etc., all coming from different sources. While it would seem easy to connect these sources, doing so in a HIPAA-compliant way is not always easy.

A group at the University of Illinois has developed a machine learning method that collects the data coming from various diagnostic tools and records and groups it under a single patient name or a single eye, while staying within HIPAA regulations.
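The basic idea of gathering records under one patient and one eye can be sketched as follows. This is not the University of Illinois method, which the presentation did not describe in detail, and it does not address HIPAA compliance or de-identification. The record layouts, field names, and values are all hypothetical.

```python
from collections import defaultdict

# Hypothetical records from three systems that do not talk to each other.
visual_fields = [{"patient_id": "P1", "eye": "OD", "mean_deviation_db": -4.2}]
oct_scans = [
    {"patient_id": "P1", "eye": "OD", "rnfl_thickness_um": 78},
    {"patient_id": "P1", "eye": "OS", "rnfl_thickness_um": 91},
]
billing = [{"patient_id": "P1", "eye": "OD", "visit_count": 3}]

def link_by_eye(*sources):
    """Merge records from every source under a (patient, eye) key."""
    linked = defaultdict(dict)
    for source in sources:
        for record in source:
            key = (record["patient_id"], record["eye"])
            for field, value in record.items():
                if field not in ("patient_id", "eye"):
                    linked[key][field] = value
    return dict(linked)

linked = link_by_eye(visual_fields, oct_scans, billing)
# linked[("P1", "OD")] now holds the visual field, OCT, and billing fields together.
```

In practice the hard part is what this sketch leaves out: the sources rarely share a clean identifier, and any linkage has to protect patient privacy.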

Currently, most physicians look at data from multiple sources to make a diagnosis. The time-consuming visual field test is probably the most reliable way of detecting whether or not someone is progressing.

With the data linked together, AI applications should make it easier to find shortcuts that can predict what will happen in glaucoma, much as is now happening in diabetic retinopathy, he said.

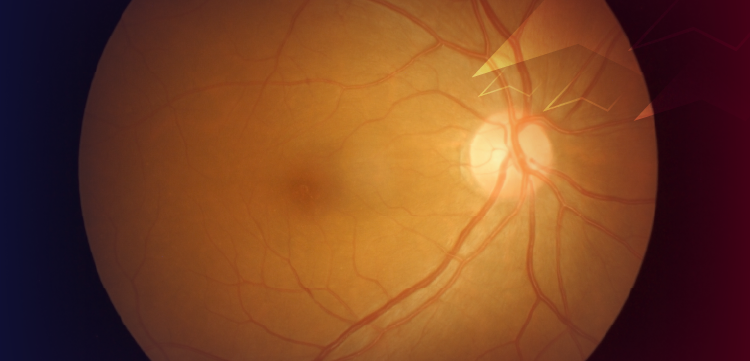

Fundus Photography

Fundus photography is a big area of AI applications in ophthalmology. The Google group has published articles on the use of AI in several areas, including refractive error prediction, cardiovascular risk detection, identification of retinal lesions, and diabetic retinopathy.

An article published in IOVS in 2018, using data from the UK Biobank and the AREDS study, predicted refractive error from fundus photographs and used attention maps to show which regions drove the prediction; the refractive error prediction was good.1 One of the figures in the article shows attention maps for myopic, emmetropic (neutral), or hyperopic patients.

Even with the computer pointing out the areas it used, it can still be difficult for an ophthalmologist to make the same determination from the image. The computer is able to do so.

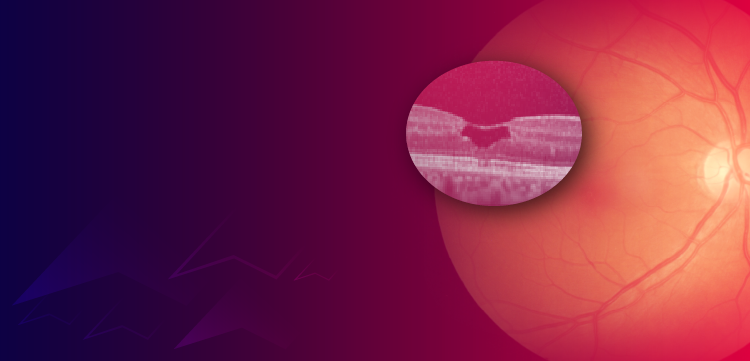

In a landmark article published in Nature Medicine in 2018, the researchers used a deep learning architecture to make referral recommendations for a set of OCT scans acquired with multiple devices.2

By using tissue segmentation, they were able to give a probability of a diagnosis and, accordingly, make an urgent, semi-urgent, routine, or observation-only referral suggestion.

When “trained” properly, the machine achieved an error rate of only 5.5% in patient referral decisions. This was better than 80% of the retina specialists and all of the optometrists, who were given not only the OCT data but also fundus data and notes.

Dr. Azar also discussed an article he called “transformational.” It was published in JAMA in 2016 and focused on using deep learning for diagnosing DR.3 The study results showed very high sensitivity and specificity, and indicated that diagnoses were as good as or better than those of the retina specialists who were convened for the study.
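For readers less familiar with the two measures, sensitivity is the share of diseased eyes the system correctly flags, and specificity is the share of healthy eyes it correctly clears. The short sketch below computes both from a list of predictions and reference grades. The ten cases are made up for illustration and have nothing to do with the JAMA study’s data.

```python
def sensitivity_specificity(predictions, truths):
    """Return (sensitivity, specificity) for paired boolean predictions and truths."""
    pairs = list(zip(predictions, truths))
    tp = sum(1 for p, t in pairs if p and t)          # disease correctly flagged
    tn = sum(1 for p, t in pairs if not p and not t)  # healthy correctly cleared
    fp = sum(1 for p, t in pairs if p and not t)      # healthy wrongly flagged
    fn = sum(1 for p, t in pairs if not p and t)      # disease missed
    return tp / (tp + fn), tn / (tn + fp)

# Made-up example: True means the eye has referable DR.
truths      = [True, True, True, True, False, False, False, False, False, False]
predictions = [True, True, True, False, False, False, False, False, False, True]

sens, spec = sensitivity_specificity(predictions, truths)
# 3 of 4 diseased eyes flagged, 5 of 6 healthy eyes cleared.
```

A system can score well on one measure and poorly on the other, which is why studies such as this one report both.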

One of the areas receiving the most attention in retinal AI applications is OCT. One study looked at severity characterization and estimation of 5-year risk of AMD progression using AI and found that the machine did very well.4

Another group studied the prediction of individual disease conversion in early AMD.5 The authors found the most critical quantitative features for progression were retinal thickness, hyper-reflective foci, and drusen areas. Dr. Azar said, “The interesting part here is this is not only predicting a disease, but also includes discovery.”

Conclusions

There are great applications of AI in ophthalmology, and the uses will continue to expand. But there are several limitations, including:

- The quality and diversity of training sets

- Problems with image quality

- Because the statistics are very good, people may erroneously conclude that the system is not making errors

- The black box effect of convolutional neural networks

Medical Education

More than 100 years ago, the famous Flexner report established the biomedical model of education, training, and research as an enduring basis of medical education.6

Dr. Azar described this as a cross-disciplinary convergence in ophthalmology, but said there are caveats when it comes to the education of ophthalmology fellows, residents, and students. There needs to be an understanding that the knowledge base these machines can provide should only be a foundation to facilitate interpretation of data.

He emphasized the importance of the humanistic elements of medicine: professionalism, communication, empathy, compassion, and respect.

Dr. Azar said these important topics should now be included in the curriculum of all medical students, because much of the information doctors previously needed to learn will be easily obtained through AI.

Disclosures:

Dimitri Azar, MD, MBA

E: [email protected]

This article was adapted from Dr. Azar’s presentation at the 2018 Johns Hopkins Wilmer Eye Institute’s Current Concepts in Ophthalmology meeting in Baltimore. At the time of the presentation, Dr. Azar was on the Board of Directors of Novartis and the Board of Directors of Verb Surgical, and received NIH funding including an R01 grant. He is currently an employee of Alphabet Verily.
